Reflection of the week: Fama.io vs Twitter

By Jesse van der Merwe

Foreword

I used flex display and some other cool things from this week's lecture. Thanks, Lucky!!! :D

P.S.

I had to remove the flex display because it was causing issues...

but now there are more issues with grid display...

sigh...

We continue to live and learn.

This week's blog post required me to look at the list of Tweets on the Theory Lecture page. The one that grabbed my attention was a tweet by a user who said:

Fama.io is further proof that AI doesn't have to be smart to be dangerous. Bad assumptions at scale

- Phatho (@Phyxtho) February 2, 2020

The reason this grabbed my attention was actually the lack of engagement that the Tweet had. I thought that such an interesting topic would have more people talking about it, and yet this particular tweet had very few responses, retweets and/or likes. I decided to do more research.

What is Fama.io?

According to the Fama.io website, Fama is "the smartest way to screen toxic workplace behaviour". It is talent screening software that helps identify problematic behaviour among potential hires and current employees by analysing publicly available online information. Below are a few more descriptions taken directly from the Fama.io website:

Compliant, AI-based online screening for the enterprise.

Our technology helps businesses identify thousands of job-relevant behaviours, such as racism or harassment, without exposing hiring managers to unnecessary risk or manual work.

Full Customization

Surface behaviours that conflict with your company’s mission and values using our customizable screening platform.

Legal Protection

Fama only highlights behaviours which you have a permissible or business purpose to view, and is a Consumer Reporting Agency.

Automated and Efficient

Escalate only the most critical reports for human review and adjudication, all driven by your company’s unique risk matrix.

Extensive Online Screening

Fama screens the entire publicly available web. Whether it is social media, web results, or paid subscription databases, you can build a package to fit your needs.

Customized Reporting

Fama does not score or provide a recommendation on a person, instead we give you the power to determine which behaviours you want to see flagged on a report.

Ongoing Monitoring

Risk doesn’t stop at the point of hire. We provide solutions, some anonymized and aggregated, to help you identify risk within your workforce.

Next Generation Efficiency

A trained investigator analysing a person’s online footprint can take 8-10 hours. It takes ten seconds to add a candidate via our web portal.

FCRA, GDPR and EEOC Compliant

Fama considers itself a Consumer Reporting Agency and abides by all applicable local, state, and federal regulations.

Consent-based Screening

Fama is a consent-based solution and offers candidates the right to challenge results in the case of a pre-adverse action.

Triple Authentication

Fama triple authenticates profiles via a proprietary method of combining best-in-class AI and trained investigators into a single workflow.

What Fama finds...

Our machine learning technology has flagged hundreds of thousands of instances of misogyny, bigotry, racism, violence and criminal behaviour in publicly available online content.

I am aware that the above points come directly from Fama itself, and thus only describe the positive points and advantages of their AI software. Further digging turned up some more interesting points about the software. According to First Advantage’s blog [1]:

“According to CareerBuilder, 60% of organizations are using public social media or online searching to screen job applicants in 2016. However, most of those companies do it manually.”

Thus, one of the biggest advantages of using Fama instead of manual social media background checks is the time it saves. A manual check can take hours of someone’s time, while the Fama software needs only a few seconds.

Another advantage is that it takes significant effort for a human to accurately confirm an identity online, since there is often more than one individual with the same name [1], whereas Fama assures users that its software is programmed to always find the correct person. However, this claim became a point of contention.

Some companies wish to remove liability from their Human Resources (HR) department by using Fama instead of asking HR employees to perform manual social media background checks.

“If you make a hire and it turns out they were posting sexist, racist and other lunacy online… that is not only a liability for an employer, it also calls into question your ability of making a hiring decision,” said Alex Granovsky, an employment lawyer in New York City [3].

It is illegal for an employer to discriminate against a potential employee based on race, religion, sex, gender, national origin, age, disability, etc. Thus, in my opinion, if a company is trying to reduce the amount of discrimination within its internal hiring process, then the FIRST step should be to ensure that all members of the HR department are NOT racist, sexist or discriminatory in any of the ways mentioned above, BEFORE using Fama.

However, I think that using software like Fama could definitely help reduce unconscious bias in the employment process. If an employer manually performs a social media background check and comes across personal public information, this may create unconscious bias against the person, simply because you cannot "unsee" information. Pamela Devata, a labour and employment law attorney with the US law firm Seyfarth Shaw, mentions that the Equal Employment Opportunity Commission (EEOC) assumes that if you accessed the information, you used it in your employment decision [1]. This has landed a few employers in legal hot water.

I also discovered that entire organizations can land in legal hot water:

"Without a standardized, automated process for accessing, viewing, evaluating, documenting and protecting social media data, organizations can quickly land in hot water. Here’s why: the same rules that apply to other forms of background checks – think: Fair Credit Reporting Act (FCRA), the EEOC – apply to social media screening." [1]

Fama CEO and co-founder Ben Mones said that employers aren’t that interested in recreational vices like alcohol use, but are instead focused on keeping bullies, misogynists and bigots out of their workplace. Yet Fama even goes as far as to publish a case study on "Alcohol On An Entry-Level Job Candidate's Social Media".

Fama has reported that while most of its screens are for potential hires, there has recently been a large increase in the number of companies running checks on current workers. Some people are now concerned that Fama could be used to enforce sexist, racist or otherwise discriminatory views in a legal way. This concern is voiced by M. Cook in their article "A Basic Lack of Understanding":

Whether or not AI is really useful, there’s no doubt that the belief that AI works is going to change our lives. For people who are already being exploited, AI will most likely be used to amplify this exploitation. For example, employers no longer need to snoop on the social media accounts of job applicants or employees; services like Fama use AI to automate this process, enabling mass surveillance of workers’ online posts and flagging things that might assist in making hiring, firing, and promotion decisions. This forces workers to either moderate their entire online presence or make all interactions private. Even more importantly, though, services like this can be used to provide a veneer of impartial logic over prejudiced managerial decisions. By sending systems like Fama after employees the company already wish to dismiss or avoid hiring for illegal reasons (decisions based on race or gender, for example) it’s easy to find justifications that come from a computer - something perceived as impartial and rational [4].

Even though we like to frame AI as making ‘decisions’ for companies, they lack many features that humans would have in the same position: algorithms can’t protest, have a change of heart, leak information, or make judgement calls. They simply do what they’re told, which makes their capacity to amplify power while simultaneously defending it from criticism extremely worrying.

It is illegal for an employer to discriminate against a potential employee based on race, religion, sex, gender, national origin, age, disability, etc., and Fama claims to be very aware of this: their software does not allow any company to access any of these classes of information. However, I believe the concern is not whether the software itself can be used in a racist/sexist/etc. way, but that it can be deliberately aimed at particular people. For example, a company could run the Fama software only on the female candidates to make sure that 'dirt' is dug up on each of them, allowing the company to 'legitly' not hire any of these women as opposed to the male candidates. Fortunately, I am pretty sure this is illegal (as mentioned above), since the same rules that apply to other forms of background checks apply to social media screening [1]. In other words, while this is a valid concern for candidates to have, it is not a valid criticism of Fama itself.

Companies using Fama are legally obligated to share the report with job candidates and employees so that they can contest or explain the results [2]. This counters the complaint many Twitter users have of being rejected by a company for ‘no apparent reason’. However, this obligation to share the Fama report resulted in a very entertaining Twitter thread from user "bruise almighty" (@kmlefranc).

The first two Tweets give some background to the situation:

I had to get a background check for my job, and it turns out the report is a 300+ page pdf of every single tweet I’ve ever liked with the word “fuck” in it.

UPDATE: I came home to a package containing a *printout* of all 351 pages of it! Obv the dystopia cares about wasting paper.

The user then shares some images of the results of the report, with the following original captions:

To those asking- I did not give them my handle or permission, I'm assuming they just found this via my (old) name.

The especially creepy part is this didn't turn up anything at all relevant or incriminating! I keep personal info on my non-public accounts. But their shitty algorithm means that my "reputation" and "character" is flagged as questionable and sent to my boss.

This entire Twitter thread made me chuckle, and also highlighted the main issue that I see with Fama being used as the only form of social media screening:

If the AI used by Fama is not artificially intelligent enough to pick up on the more subtle human emotions such as sarcasm... THEN WHY ON EARTH WOULD YOU USE IT TO SCAN THROUGH TWEETS ON TWITTER!?!

(aka the human cesspit of sarcasm)

Still, up to this point my overall impression of Fama was fairly positive:

- Saves the business LOTS of time

- Takes the human employee out of the screening process to prevent unconscious bias

- Appears to not allow businesses to search or even consider information such as race/sex/gender/etc.

- The fact that Fama is "focused on keeping bullies, misogynists and bigots out of their workplace" sounds pretty legit.

- Businesses just need to read through the entire report to make sure that Twitter sarcasm (and other examples of human emotion that AI doesn't understand) is not used against the potential employee.

But then...

I came across a particular Fama.io blog post...

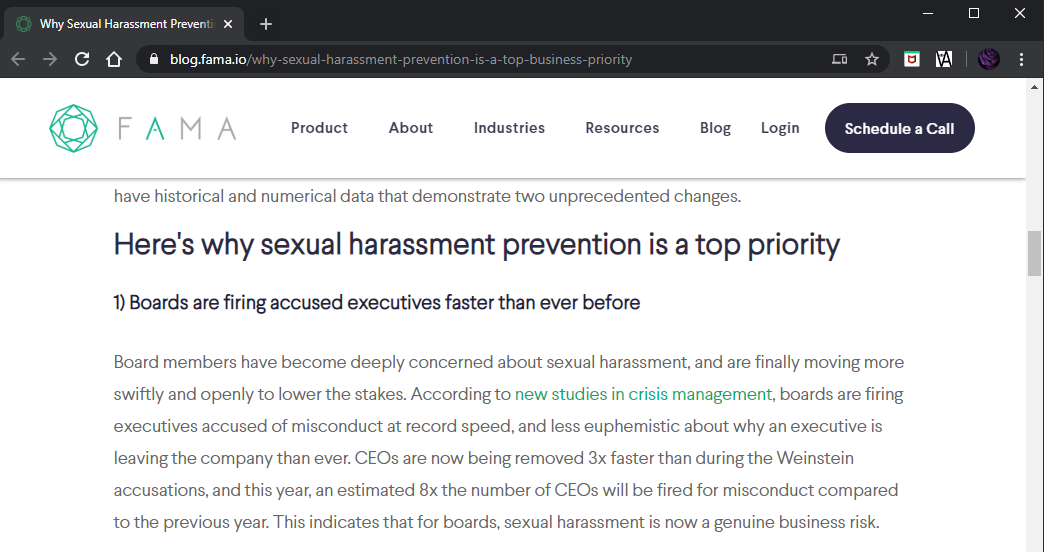

Which lists the two reasons why sexual harassment prevention is now a top business priority...

...

THE FACT THAT BOARDS ARE FIRING EXECS AND TAKING BACK MONEY IS A GOOD FUCKING THING!!!???

BECAUSE THESE EXECS ARE DISGUSTING PIGS THAT DESERVE TO GO TO JAIL??!!??!!

?!?

Perhaps a better reason for prioritizing the prevention of sexual harassment in the workplace could include the fact that sexual harassment inflicted by one person upon another person at ANY place (let alone the workplace) is immoral, unethical, illegal, disgusting, revolting, repulsive, sickening, nauseating, unsavoury, foul, outrageous, horrifying, monstrous, heinous, abhorrent, detestable, deplorable, inexcusable, etc. etc. etc.

Not to mention the fact that being sexually harassed can impact the psychological health, physical well-being and vocational development of the victim.

I am concerned and no longer "pro-Fama".

References

[1] First Advantage, “Is Social Media Screening Helping, or Hurting, Your Hiring Process?,” First Advantage, 1 November 2016. [Online]. Available: https://fadv.com. [Accessed 26 May 2020].

[2] K. Vasel, “This company uses AI to flag racist and sexist comments from potential hires,” CNN Business, 12 April 2019. [Online]. Available: https://edition.cnn.com. [Accessed 26 May 2020].

[3] J. Pettitt, “The tech that hiring managers are using to screen all of your social media posts,” CNBC, 14 October 2016. [Online]. Available: https://www.cnbc.com. [Accessed 26 May 2020].

[4] M. Cook, “A Basic Lack of Understanding // Notes from Below,” Notes from Below, 30 March 2018. [Online]. Available: https://notesfrombelow.org. [Accessed 26 May 2020].

[5] Center for Victim Advocacy and Violence Prevention, “Sexual Harassment,” 22 July 2010. [Online]. Available: https://www.usf.edu. [Accessed 26 May 2020].